Unlocking the Potential of AI Transformers and Attention Mechanisms in Chemical Identification using Raman Spectroscopy

Naming is everything. Once it is named, it is no longer unknown. Once it is known, it can now exist.

"And out of the ground, the LORD God formed every beast of the field, and every fowl of the air, and brought them unto Adam to see what he would call them: and whatsoever Adam called every living creature, that was the name thereof. And Adam gave names to all cattle, and to the fowl of the air, and to every beast of the field"

(Genesis 2:19-20)

Artificial Intelligence (AI) has advanced remarkably in recent years, and one of the key contributors to this progress is the development of AI Transformers and attention mechanisms. These technologies have transformed several fields, including natural language processing (machine translation in particular), computer vision, and recommendation systems. In this article, we look at how AI Transformers and their attention mechanisms work and where they are applied, and then explore how they might be used to identify chemical compounds from their Raman spectra.

Understanding AI Transformers

AI Transformers are deep learning models that excel at capturing complex relationships in sequences of data, using an attention mechanism loosely inspired by selective attention in human cognition. They are based on the Transformer architecture introduced by Vaswani et al. in 2017 in the paper "Attention Is All You Need", and have since become a fundamental tool for many AI applications. Unlike recurrent neural networks (RNNs), which process a sequence one element at a time, Transformers process the entire sequence at once, which makes them highly parallelizable and efficient to train. Because attention connects every position in the sequence directly to every other position, Transformers are also well suited to handling long-range dependencies and global context, and they have achieved state-of-the-art results on many tasks involving sequential data.

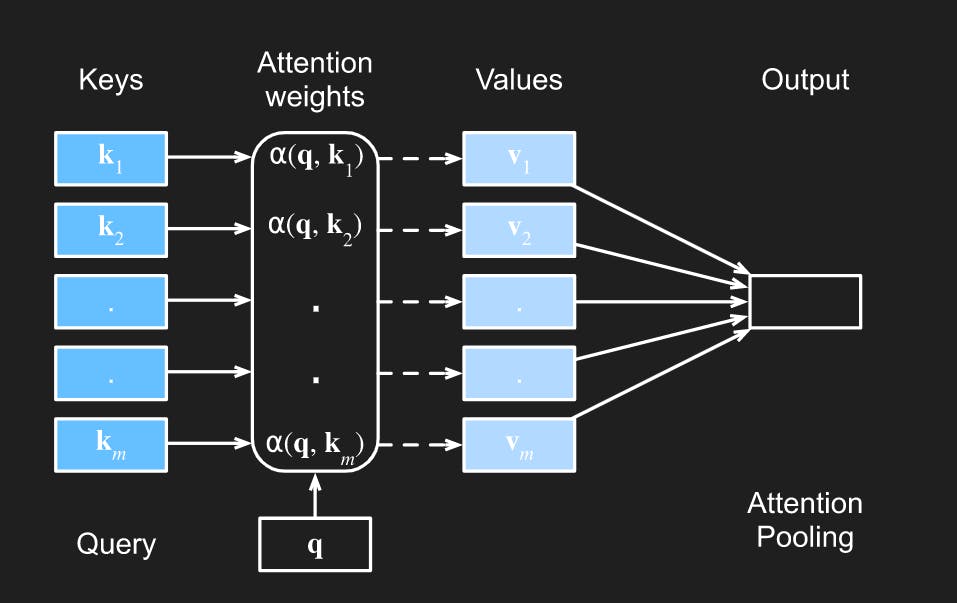

At the heart of AI Transformers lies the attention mechanism, the component responsible for picking out relevant information from input sequences. Attention allows the model to assign different levels of importance to different parts of the input when making predictions or generating output. It can be thought of as a spotlight: for each element being processed, the model scores every element of the input for relevance and concentrates on the highest-scoring ones. This lets the Transformer draw dynamically on both local and global context and produce more accurate, contextually rich representations.

Figure 1: The attention mechanism computes a linear combination of values v_i via attention pooling, where weights are derived according to the compatibility between a query q and keys k_i.
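The attention pooling described in Figure 1 can be written compactly. In the notation below (a standard formulation, with the scoring function $a$ left generic), the softmax turns compatibility scores into weights that sum to one; the second line is the scaled dot-product form used in the Transformer of Vaswani et al., where $d_k$ is the dimension of the key vectors.

```latex
\operatorname{attention}(\mathbf{q}) = \sum_{i=1}^{n} \alpha(\mathbf{q}, \mathbf{k}_i)\, \mathbf{v}_i,
\qquad
\alpha(\mathbf{q}, \mathbf{k}_i) = \frac{\exp\big(a(\mathbf{q}, \mathbf{k}_i)\big)}{\sum_{j=1}^{n} \exp\big(a(\mathbf{q}, \mathbf{k}_j)\big)}

% Scaled dot-product scoring, stacked over all queries (rows of Q) at once:
\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```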

A core form of attention is self-attention, also known as intra-attention, in which the queries, keys and values are all derived from the same input sequence. In the Transformer, self-attention is computed with scaled dot-product attention: each element is projected into a query, a key and a value vector, and the dot product of one element's query with another element's key, divided by the square root of the key dimension, gives their attention score. Because a score is computed for every pair of elements regardless of their position, the model can capture relationships and dependencies across the whole sequence. A softmax turns each element's scores into weights, and the weighted sum of the value vectors, commonly referred to as the attention output or context vector, becomes the new learned representation of that element. Self-attention thus enables Transformers to weigh the relevance of every element within a sequence, improving the model's ability to process and understand complex patterns.
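The steps just described can be sketched in a few lines of plain Python. This is a minimal, single-head illustration assuming random projection matrices, no masking and no bias terms; the helper names (`matmul`, `self_attention`, `random_matrix`) are hypothetical, and a real model would learn the projections and use an optimised tensor library.

```python
import math
import random


def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (both lists of lists)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no masking, no biases).

    x: n x d input sequence; w_q, w_k, w_v: d x d_k projection matrices.
    Returns (output, weights); weights[i][j] is how strongly position i attends to j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    k_t = [list(col) for col in zip(*k)]                              # keys transposed: d_k x n
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]  # n x n
    weights = [softmax(row) for row in scores]                        # each row sums to 1
    return matmul(weights, v), weights                                # weighted sum of values


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Usage: a sequence of 5 elements with 4 features each, projected to d_k = 3.
rng = random.Random(0)
x = random_matrix(5, 4, rng)
w_q, w_k, w_v = (random_matrix(4, 3, rng) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
```

Each row of `attn` is a probability distribution over the 5 positions, and each row of `out` is the corresponding mixture of value vectors.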

Natural Language Processing: AI Transformers with attention mechanisms have significantly advanced the field of natural language processing (NLP). Models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) have achieved state-of-the-art performance in tasks like machine translation, sentiment analysis, question answering, and text summarization.

Computer Vision: Attention mechanisms have been successfully applied to computer vision tasks such as image classification, image captioning and object detection. By allowing the model to focus on relevant regions of an image, attention can improve the accuracy of predictions, and visualising the attention weights can offer some insight into the model's decision-making process.

Recommendation Systems: AI Transformers with attention mechanisms have been utilized in recommendation systems to capture user preferences and improve personalized recommendations. These models can effectively analyze user behaviour sequences and dynamically attend to relevant interactions, resulting in more accurate and context-aware recommendations.

Raman spectroscopy is a powerful analytical technique used to identify and characterize chemical compounds based on their molecular vibrations. With advances in AI Transformers and attention mechanisms, the potential to apply these technologies to chemical identification has grown considerably.

The Power of Raman Spectroscopy

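Raman spectroscopy provides valuable insights into the molecular composition and structure of chemical compounds. It involves shining a laser on a sample: most of the light is scattered with its energy unchanged (Rayleigh scattering), but a small fraction is scattered inelastically, with the photons losing or gaining energy equal to the energy of a molecular vibration. Plotting the intensity of this scattered light against its energy shift (the Raman shift, usually expressed as a wavenumber in cm⁻¹) gives the Raman spectrum. By analyzing these spectra, researchers can identify the unique vibrational fingerprints of different chemical compounds, enabling rapid and non-destructive identification.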
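The Raman shift is the difference between the wavenumber of the excitation laser and that of the scattered light. The helpers below (hypothetical names) convert wavelengths in nanometres to a shift in cm^-1 and back; the 532 nm excitation wavelength is only an example, not a value taken from the text.

```python
def raman_shift_cm1(excitation_nm: float, scattered_nm: float) -> float:
    """Raman shift in cm^-1 from excitation and scattered wavelengths in nm.

    1 nm = 1e-7 cm, so a wavelength of L nm corresponds to 1e7 / L cm^-1.
    Positive values are Stokes shifts (the scattered photon lost energy).
    """
    return 1e7 / excitation_nm - 1e7 / scattered_nm


def scattered_wavelength_nm(excitation_nm: float, shift_cm1: float) -> float:
    """Wavelength (nm) at which a band with the given Stokes shift appears."""
    return 1e7 / (1e7 / excitation_nm - shift_cm1)


# Example: with a 532 nm laser, a band at a 1000 cm^-1 shift appears at about 561.9 nm.
lam = scattered_wavelength_nm(532.0, 1000.0)
shift = raman_shift_cm1(532.0, lam)
```

Working in wavenumbers rather than wavelengths means a given vibrational band appears at the same Raman shift whichever laser is used, which is why spectra from different instruments can be compared on a common axis.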

To date, there are no universally accepted protocols for analyzing Raman spectra. However, a recent publication in Nature Protocols titled "Chemometric analysis in Raman spectroscopy from experimental design to machine learning–based modelling" by Bocklitz and colleagues offers valuable guidance in this area. The paper outlines a comprehensive framework for analyzing Raman spectra that covers experimental design, data preparation, data modelling, and statistical analysis. The authors also highlight challenges that may arise during the analysis and recommend ways to overcome them.
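Data preparation for Raman spectra commonly involves steps such as smoothing, baseline correction and normalisation. The sketch below is not the protocol from the paper; it is a deliberately simple illustration of three such steps, using hypothetical function names and a synthetic spectrum. The straight-line baseline in particular is an assumption made for brevity; real fluorescence backgrounds usually call for polynomial or asymmetric least-squares fitting.

```python
import math


def moving_average(y, window=5):
    """Smooth a spectrum with a centred moving average (window should be odd)."""
    half = window // 2
    out = []
    for i in range(len(y)):
        lo, hi = max(0, i - half), min(len(y), i + half + 1)
        out.append(sum(y[lo:hi]) / (hi - lo))  # shorter window at the edges
    return out


def subtract_linear_baseline(y):
    """Subtract the straight line joining the first and last points (crude baseline)."""
    n = len(y)
    slope = (y[-1] - y[0]) / (n - 1)
    return [y[i] - (y[0] + slope * i) for i in range(n)]


def vector_normalise(y):
    """Scale a spectrum to unit Euclidean (L2) norm so intensities are comparable."""
    norm = math.sqrt(sum(v * v for v in y))
    return [v / norm for v in y] if norm > 0 else list(y)


def preprocess(y, window=5):
    return vector_normalise(subtract_linear_baseline(moving_average(y, window)))


# Synthetic spectrum: a sloping background plus one Gaussian peak at index 50.
raw = [0.02 * i + 5.0 * math.exp(-((i - 50) / 3.0) ** 2) for i in range(101)]
clean = preprocess(raw)
```

The order matters: smoothing before baseline estimation keeps noise from skewing the end points, and normalising last ensures the final spectra share a common scale.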

AI Transformers can process and model complex sequential data, and their attention mechanisms can pick out the most informative parts of an input sequence. Since a Raman spectrum is itself an ordered sequence of intensities along the Raman-shift axis, these models offer considerable potential for chemical identification using Raman spectroscopy.

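Building a Large Training Dataset: If large numbers of Raman spectra, potentially millions, can be gathered from various sources, an extensive training dataset can be constructed. Such a collection would include spectra of compounds with known identities as well as of unidentified or unnamed samples. Only the identified spectra can serve directly as labelled training examples; the unidentified ones could still be useful for unsupervised or self-supervised pre-training. This dataset serves as the foundation for training the AI Transformer model.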
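A minimal sketch of that first split, assuming a hypothetical `SpectrumRecord` schema in which every spectrum is already sampled on a shared Raman-shift axis: identified spectra become (intensities, class index) pairs, and unidentified ones are set aside. The intensities and compound names are toy values, not real spectra.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class SpectrumRecord:
    """One measured spectrum (hypothetical schema for illustration)."""
    intensities: List[float]    # intensities on a shared Raman-shift axis
    name: Optional[str] = None  # compound name, or None if unidentified


def split_and_index(
    records: List[SpectrumRecord],
) -> Tuple[List[Tuple[List[float], int]], List[List[float]], Dict[str, int]]:
    """Return (labelled examples, unlabelled spectra, name -> class index)."""
    names = sorted({r.name for r in records if r.name is not None})
    name_to_idx = {name: i for i, name in enumerate(names)}  # stable, sorted indices
    labelled = [(r.intensities, name_to_idx[r.name]) for r in records if r.name is not None]
    unlabelled = [r.intensities for r in records if r.name is None]
    return labelled, unlabelled, name_to_idx


records = [
    SpectrumRecord([0.1, 0.9, 0.2], "ethanol"),
    SpectrumRecord([0.8, 0.1, 0.3], "acetone"),
    SpectrumRecord([0.1, 0.8, 0.3], "ethanol"),
    SpectrumRecord([0.4, 0.4, 0.4]),  # unidentified sample
]
labelled, unlabelled, name_to_idx = split_and_index(records)
```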

Extracting Relevant Information: Attention mechanisms within the AI Transformer model allow it to extract the most pertinent features from the Raman spectra. The model can learn to attend to the regions of the spectrum, such as characteristic peaks, that best distinguish one chemical compound from another. This enables the model to capture the essential vibrational patterns, including relationships between peaks that arise from different molecular bonds.
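One possible way to present a 1D spectrum to a Transformer, borrowed from Vision Transformers, is to cut it into fixed-width patches that each become a token. The sketch below assumes that patching scheme and uses a single hand-picked query vector in place of learned parameters, purely to show attention concentrating on the patch that contains a peak; all names and values are hypothetical.

```python
import math


def patchify(spectrum, patch_size):
    """Split a 1D spectrum into non-overlapping patches (tokens); drops any remainder."""
    n = len(spectrum) // patch_size
    return [spectrum[i * patch_size:(i + 1) * patch_size] for i in range(n)]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(tokens, query):
    """Scaled dot-product attention of one query over all patch tokens.

    Keys and values are the raw patches here; a real model would apply learned
    projections and add positional (Raman-shift) information first.
    """
    d = len(query)
    scores = [sum(q * t for q, t in zip(query, tok)) / math.sqrt(d) for tok in tokens]
    weights = softmax(scores)
    pooled = [sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(d)]
    return pooled, weights


# Toy spectrum: flat background with one sharp peak near index 42.
spectrum = [0.1 + 3.0 * math.exp(-((i - 42) / 2.0) ** 2) for i in range(80)]
tokens = patchify(spectrum, patch_size=8)  # 10 tokens of 8 points each
query = [1.0] * 8                          # hand-picked, not learned
pooled, weights = attention_pool(tokens, query)
peak_patch = max(range(len(weights)), key=weights.__getitem__)  # patch 5 covers indices 40-47
```

Inspecting `weights` in this way is also a simple route to seeing which spectral regions a model relies on, though attention weights are only a partial explanation of a trained model's behaviour.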

Training the Model: The AI Transformer model is trained on the large dataset of labelled Raman spectra: its parameters are adjusted to reduce the error between the compound it predicts and the true compound, while attention guides its focus towards the most relevant parts of each spectrum. In this way the model learns to associate specific spectral features with known compound names, building a mapping from Raman spectra to their corresponding chemical identities.
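Learning to map spectra to compound names is usually framed as multi-class classification: the model outputs one score (logit) per known compound, and training minimises the cross-entropy between the softmax of those scores and the true label. The sketch below shows only that objective, with made-up logits standing in for a model's output; the optimiser and the network itself are outside its scope.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def cross_entropy(logits: List[float], target: int) -> float:
    """Negative log-probability the model assigns to the correct compound class."""
    return -log_softmax(logits)[target]


def batch_loss(batch_logits: List[List[float]], targets: List[int]) -> float:
    """Mean cross-entropy over a batch; this is the quantity training would minimise."""
    return sum(cross_entropy(l, t) for l, t in zip(batch_logits, targets)) / len(targets)


# Three compound classes; the true class of this spectrum is index 2.
confident_right = [0.1, 0.2, 4.0]
confident_wrong = [4.0, 0.2, 0.1]
loss_right = cross_entropy(confident_right, 2)
loss_wrong = cross_entropy(confident_wrong, 2)
```

A confident correct prediction gives a small loss and a confident wrong one a large loss, so reducing the average loss pushes the model to rank the true compound first.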

Naming Unknown Samples: Once the AI Transformer model is trained, it can be used to identify and name unknown samples based on their Raman spectra. The model applies attention to the relevant features of the new spectrum and, using the patterns it learned during training, assigns the most likely name or class to the unknown sample, ideally together with a measure of confidence. It can only choose among the names it was trained on, so a low-confidence result is a useful signal that the sample may be something new.
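In practice it helps to report the top few candidate names with their probabilities and to decline to name a sample when no candidate is confident enough. The sketch below assumes simple maximum-softmax thresholding; the threshold of 0.7 and the compound names are arbitrary placeholders, not recommended values.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def name_sample(
    logits: List[float], class_names: List[str], k: int = 3, threshold: float = 0.7
) -> Tuple[str, List[Tuple[str, float]]]:
    """Return (assigned name or 'unknown', top-k (name, probability) candidates)."""
    probs = softmax(logits)
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)[:k]
    best_name, best_prob = ranked[0]
    return (best_name if best_prob >= threshold else "unknown"), ranked


names = ["acetone", "ethanol", "toluene", "cyclohexane"]
clear = name_sample([0.2, 5.0, 0.1, 0.3], names)    # one clear winner: ethanol
unclear = name_sample([1.0, 1.1, 0.9, 1.0], names)  # near-uniform: "unknown"
```

Softmax probabilities are often over-confident, so a threshold like this would need to be calibrated on held-out spectra, including some from compounds the model never saw.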

Figure 2: Schematic of Raman data processing with deep learning and artificial intelligence methods.

Advantages and Potential Applications

The use of AI Transformers and attention mechanisms in chemical identification using Raman spectroscopy offers several advantages:

Rapid and Accurate Identification: Once trained, the AI model can quickly process large volumes of Raman spectra and, provided it has been trained and validated on representative data, identify chemical compounds accurately. This accelerates the identification process, saving time and resources.

Name Unidentified Samples: The AI model could also help characterise chemical compounds that have not previously been identified or named. A classifier cannot invent a name outside its training labels, but by leveraging the patterns learned from the training dataset it can flag a spectrum as unfamiliar, infer the closest matching known compound, or provide insights into the chemical structure.
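Inferring the closest matching compound can be framed as a nearest-neighbour search: compare the sample's representation with those of reference compounds and report the most similar ones. The sketch below uses cosine similarity on plain vectors; in a Transformer pipeline these would presumably be embeddings produced by the trained model (an assumption here), and the reference names and vectors are toy values.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def closest_matches(
    query: List[float], library: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Rank reference compounds by cosine similarity to the query vector."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in library.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


library = {  # toy reference embeddings, not real data
    "compound_A": [1.0, 0.0, 0.2],
    "compound_B": [0.0, 1.0, 0.1],
    "compound_C": [0.7, 0.7, 0.0],
}
matches = closest_matches([0.9, 0.1, 0.2], library)
```

A similarity score alone does not establish identity; a low best score is itself informative, suggesting the sample may not be represented in the reference set at all.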

Enhance Database and Knowledge: Once verified by experts, the AI model's predictions can be used to expand chemical databases, improving the accuracy and completeness of existing repositories. Additionally, the model's insights can contribute to chemical knowledge, aiding in the discovery and understanding of new compounds.

However, there is a practical obstacle: companies specialising in Raman spectroscopy are often unwilling to simply hand over their Raman data to the public, since the many hours spent collecting and curating those spectra form the foundation of their business.

The integration of AI Transformers and attention mechanisms into chemical identification using Raman spectroscopy could mark a significant shift in the field. By using attention to extract relevant information from large training datasets, these models have the potential to identify chemical compounds rapidly and accurately, and to flag samples that do not match anything already known. The prospect of characterising unknown chemicals and expanding chemical knowledge opens up new possibilities for research, discovery, and practical applications in various industries, including pharmaceuticals, materials science, and forensics.